Your First Experiment

Get started with your first Iter8 experiment by benchmarking an HTTP service.

1. Install Iter8 CLI

Install the latest stable release of the Iter8 CLI using brew as follows.

brew tap iter8-tools/iter8

brew install iter8@0.10

Alternatively, install the latest stable release of the Iter8 CLI using a compressed binary tarball for your platform.

macOS (amd64):

wget https://github.com/iter8-tools/iter8/releases/latest/download/iter8-darwin-amd64.tar.gz

tar -xvf iter8-darwin-amd64.tar.gz

Move darwin-amd64/iter8 to any directory in your PATH.

Linux (amd64):

wget https://github.com/iter8-tools/iter8/releases/latest/download/iter8-linux-amd64.tar.gz

tar -xvf iter8-linux-amd64.tar.gz

Move linux-amd64/iter8 to any directory in your PATH.

Linux (386):

wget https://github.com/iter8-tools/iter8/releases/latest/download/iter8-linux-386.tar.gz

tar -xvf iter8-linux-386.tar.gz

Move linux-386/iter8 to any directory in your PATH.

Windows (amd64):

wget https://github.com/iter8-tools/iter8/releases/latest/download/iter8-windows-amd64.tar.gz

tar -xvf iter8-windows-amd64.tar.gz

Move windows-amd64/iter8.exe to any directory in your PATH.

Alternatively, install the latest stable release of the Iter8 CLI in your GitHub Actions workflow as follows.

- uses: iter8-tools/iter8@v0.10
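If you script the tarball install, the platform choice can be automated. The following is a minimal sketch, not part of Iter8: iter8_asset is a hypothetical helper that maps the output of uname -s and uname -m to one of the four tarball names listed above. The mapping of uname strings (for example x86_64 to amd64) is an assumption about typical systems.

```shell
# Hypothetical helper (not part of the Iter8 CLI): map an OS name and a CPU
# name, as printed by `uname -s` and `uname -m`, to a release tarball name.
iter8_asset() {
  os="$1"
  arch="$2"
  case "$os" in
    Darwin) os_part="darwin" ;;
    Linux) os_part="linux" ;;
    MINGW*|MSYS*|CYGWIN*) os_part="windows" ;;
    *) echo "unsupported OS: $os" >&2; return 1 ;;
  esac
  case "$arch" in
    x86_64|amd64) arch_part="amd64" ;;
    i386|i686) arch_part="386" ;;
    *) echo "unsupported architecture: $arch" >&2; return 1 ;;
  esac
  # Only these four combinations appear in the install instructions.
  case "$os_part-$arch_part" in
    darwin-amd64|linux-amd64|linux-386|windows-amd64)
      echo "iter8-$os_part-$arch_part.tar.gz" ;;
    *)
      echo "no tarball listed for $os_part-$arch_part" >&2; return 1 ;;
  esac
}

# Example use (commented out so that sourcing this file has no side effects):
# asset="$(iter8_asset "$(uname -s)" "$(uname -m)")"
# wget "https://github.com/iter8-tools/iter8/releases/latest/download/$asset"
```

After moving the binary into a directory in your PATH, running command -v iter8 is a quick way to confirm that the shell can find it.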

2. Launch experiment

Use iter8 launch to benchmark the HTTP service whose URL is https://httpbin.org/get.

iter8 launch -c load-test-http --set url=https://httpbin.org/get --set numRequests=40

The iter8 launch command downloads Iter8 experiment charts, combines the specified chart (load-test-http in this example) with the given parameter values (url and numRequests in this example), generates the experiment.yaml file, runs the experiment, and writes the results to the result.yaml file.

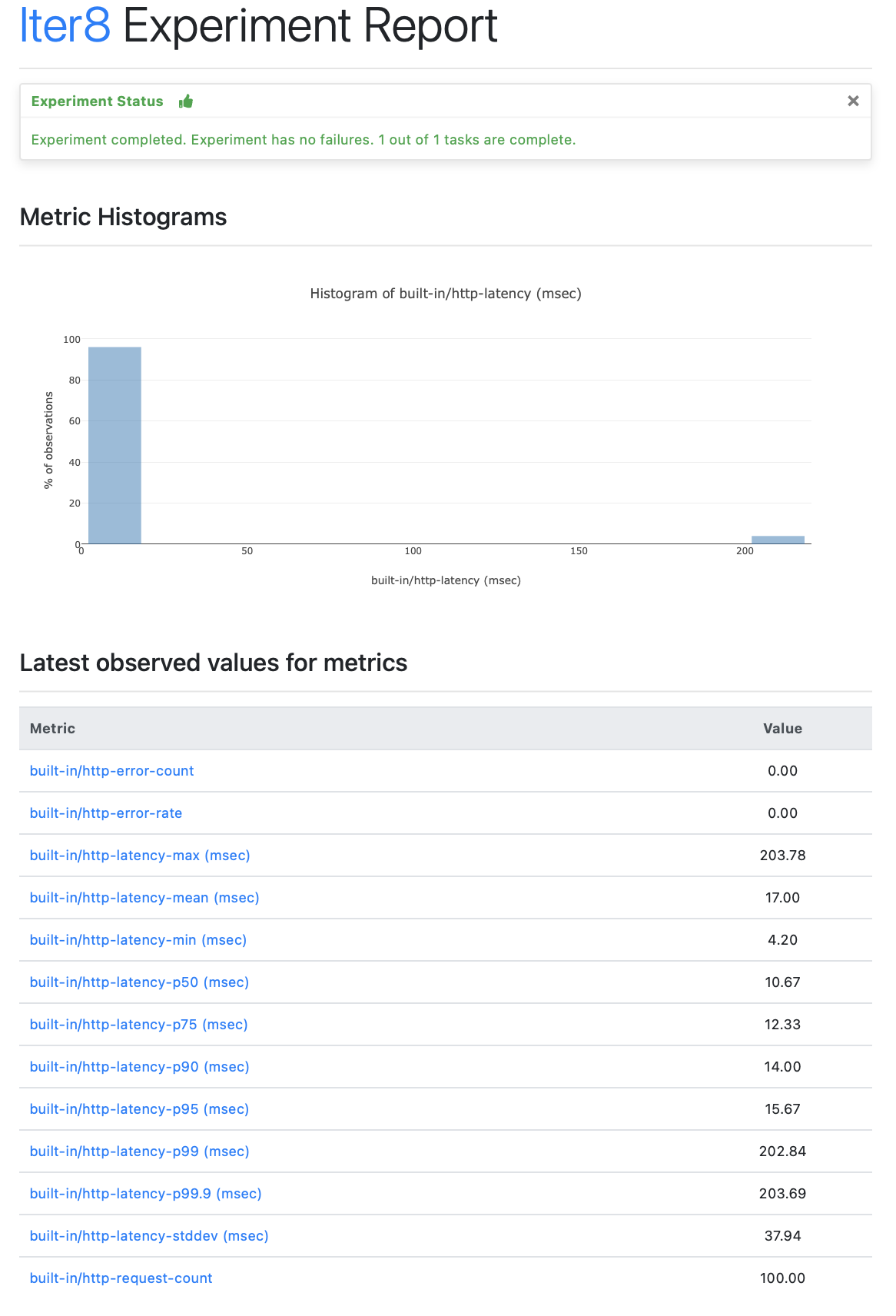
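Since the launch step is described as producing experiment.yaml and result.yaml, a script can check for both files before moving on to the report. The sketch below is illustrative only: check_experiment_files is a hypothetical helper, and it assumes the two files are written to the directory from which iter8 launch was run.

```shell
# Hypothetical helper (not part of the Iter8 CLI): report whether the two
# files named in the launch description exist in a directory.
# Assumption: iter8 launch writes them to the current working directory.
check_experiment_files() {
  dir="${1:-.}"
  missing=0
  for f in experiment.yaml result.yaml; do
    if [ -f "$dir/$f" ]; then
      echo "found $f"
    else
      echo "missing $f"
      missing=1
    fi
  done
  # Exit status 0 only when both files are present.
  return "$missing"
}

# Example use after a launch (commented out so sourcing has no side effects):
# check_experiment_files . || echo "launch did not produce all expected files"
```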

3. View experiment report

iter8 report

The text report looks like this:

Experiment summary:

*******************

Experiment completed: true

No task failures: true

Total number of tasks: 1

Number of completed tasks: 1

Latest observed values for metrics:

***********************************

Metric |value

------- |-----

built-in/http-error-count |0.00

built-in/http-error-rate |0.00

built-in/http-latency-max (msec) |203.78

built-in/http-latency-mean (msec) |17.00

built-in/http-latency-min (msec) |4.20

built-in/http-latency-p50 (msec) |10.67

built-in/http-latency-p75 (msec) |12.33

built-in/http-latency-p90 (msec) |14.00

built-in/http-latency-p95 (msec) |15.67

built-in/http-latency-p99 (msec) |202.84

built-in/http-latency-p99.9 (msec) |203.69

built-in/http-latency-stddev (msec) |37.94

built-in/http-request-count |100.00
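The metric rows in the text report have a simple name|value layout, so a single value can be pulled out with standard tools. The following is a minimal sketch that assumes the report keeps exactly the layout shown above; metric_value is a hypothetical helper, not an Iter8 command.

```shell
# Hypothetical helper (not part of the Iter8 CLI): read a text report on
# stdin and print the value of the metric whose name is given as $1.
# Assumption: rows look like "name (unit) |value", as in the sample report.
metric_value() {
  awk -F'|' -v name="$1" '
    {
      key = $1
      sub(/^[ \t]+/, "", key)        # trim leading whitespace
      sub(/[ \t]+$/, "", key)        # trim trailing whitespace
      sub(/ \([^)]*\)$/, "", key)    # drop a trailing unit such as " (msec)"
      if (key == name) {
        gsub(/[ \t]/, "", $2)        # strip whitespace around the value
        print $2
        exit
      }
    }'
}

# Example use (commented out so sourcing has no side effects):
# iter8 report | metric_value built-in/http-latency-p95
```

The match is on the exact metric name, so asking for built-in/http-latency-p99 does not pick up the built-in/http-latency-p99.9 row.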

iter8 report -o html > report.html # view in a browser

The HTML report looks like this

Congratulations! You completed your first Iter8 experiment.